More needles, bigger haystacks: What we mean when we talk about data-driven VC

Finding the right deals in VC is a daily game of finding a needle in a haystack – or, more accurately, a lot of needles in a lot of haystacks.

Investors have long been doing this manually: trawling the internet, sweating their networks and following up on cold inbounds, hoping to find new opportunities before everyone else. It’s a large part of the enjoyment of the job – but also the biggest time sink. This manual data collection limits how much ground investors can cover and how fast they can cover it, and it gets in the way of the best possible fit between startup and investor.

Now, there’s a shift towards data-driven sourcing, screening and evaluation – using data to find and pick winners faster. That’s what we’re doing at Moonfire.

By scraping websites and connecting up to APIs, we automate most of our sourcing – while simultaneously casting the net much wider – and then use machine learning models to screen for the companies we think are a good fit for us, before passing these on to our investors to evaluate. Mike and I wrote recently about how we use large language models to find companies that fit our investment thesis. Today, we’ll shed some light on how these models fit into the bigger picture.

Running with the haystack metaphor, here’s how we use automation and machine learning to find more needles in bigger haystacks, and save our investors from picking through the chaff.

Making hay

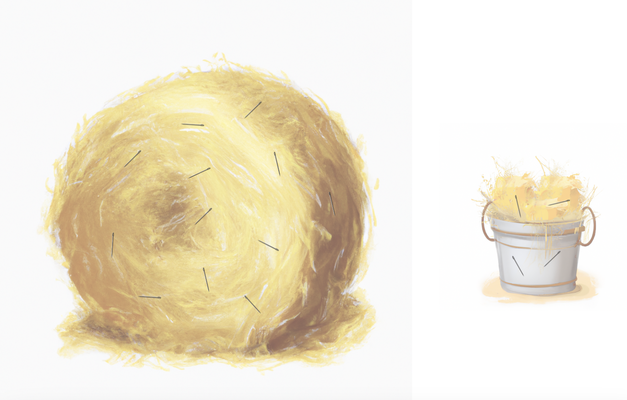

We start by making hay. That’s the sourcing. We identify companies from various sources, like Crunchbase, Y Combinator, Twitter and many more. Depending on the source, this means scraping websites or connecting up to an API for the data. This gives us our haystack: the companies we’ve sourced that week.

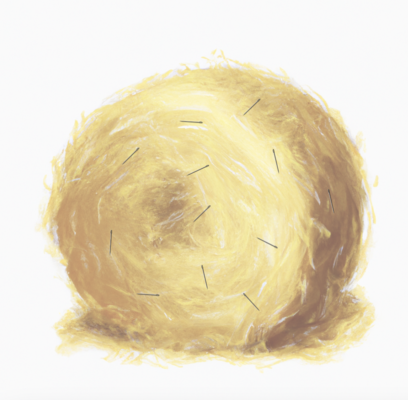

Inside that haystack are a bunch of needles: interesting companies. These aren’t necessarily companies that we think are going to be the next unicorn. That would be nice, but trying to predict it through language analysis of the scant data available on pre-seed and seed-stage companies is a dark art! Rather, we’re looking for companies that fit our geography, sector and stage, and that are aligned with our investment thesis.

To extract the needles, we use a magnet: our pipeline, made up of a bunch of machine learning models and regular software, orchestrated in the cloud. Because we focus on pre-seed and seed-stage companies, our models are mostly looking at textual information – company descriptions, articles, social media posts, etc – rather than financial information, of which there is usually very little. We use this to grab a small part of the haystack. Ideally, this is a strong magnet, capturing lots of needles, but it’s not perfect. We still end up with a lot of hay.

This small selection of needles and hay all ends up in our bucket. You can think of the bucket as the fixed time budget our investment team has to actually look through new opportunities. We hand the bucket over to our investors. They manually look through everything in it, find the needles, and report back on the hay so we can fine-tune the models for each source.
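One way to picture the bucket as a fixed budget is to keep only the highest-scoring companies up to a set number. This is a sketch under that assumption, not a description of the actual pipeline: `fill_bucket`, the company names and the scores are all made up for illustration.

```python
import heapq


def fill_bucket(scores: dict[str, float], budget: int) -> list[str]:
    """Return the `budget` highest-scoring companies, best first."""
    return heapq.nlargest(budget, scores, key=scores.get)


# Hypothetical model scores for one week's haystack.
scores = {"acme": 0.92, "birch": 0.35, "cobalt": 0.81, "dune": 0.60}

# Assume the investment team only has time for two companies this week.
bucket = fill_bucket(scores, budget=2)
print(bucket)  # ['acme', 'cobalt']
```

Whatever lands in the bucket still gets a manual look, so the budget caps the review time rather than replacing the review.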

That’s how our sourcing and screening system works in a nutshell (or haystack). Obviously, you want just a bucket full of needles, but that’s never going to happen. So how do we improve it?

A bigger haystack

Now, the haystack we start with is only a small sample of all the companies out there. There’s a lot of field left to explore. So we could tap into more sources to increase the size of the haystack – and, hopefully, the number of needles – we end up with each week. Ideally, we’re hoping to get as close to complete coverage as possible.

How do we do that? Largely through feedback from our investment team. If they find an interesting company that our pipeline didn’t surface, we know that we missed it – either because it was a false negative (we sourced it, but incorrectly left the needle in the haystack), or because it was never part of the haystack to begin with. We call these missed opportunities. We ask the investor to tell us how they found the company, and then we figure out if we can add that source to our sourcing engine, whether that’s scraping a new website or tapping data from another API.
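The two kinds of missed opportunity can be told apart with a simple membership check. Here is a minimal sketch, assuming we keep a set of everything sourced that week and a set of what reached the bucket; `MissReason`, `classify_miss` and the company names are hypothetical.

```python
from enum import Enum
from typing import Optional


class MissReason(Enum):
    FALSE_NEGATIVE = "sourced but screened out"
    NOT_SOURCED = "never in the haystack"


def classify_miss(company: str, sourced: set, bucket: set) -> Optional[MissReason]:
    """Explain why an investor-found company did not reach the bucket."""
    if company in bucket:
        return None  # the pipeline did surface it, so it is not a miss
    if company in sourced:
        return MissReason.FALSE_NEGATIVE  # a screening problem
    return MissReason.NOT_SOURCED  # a coverage problem: consider a new source


sourced = {"acme", "birch", "cobalt"}  # hypothetical haystack for the week
bucket = {"acme"}                      # hypothetical output of the screening step

print(classify_miss("birch", sourced, bucket))  # MissReason.FALSE_NEGATIVE
print(classify_miss("dune", sourced, bucket))   # MissReason.NOT_SOURCED
```

A false negative points at the magnet, while a company that was never sourced points at the size of the haystack.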

This is how we found that Twitter was such a good source. Early-stage companies often have little to no online presence: no financial information, no website, no Crunchbase page or TechCrunch article. But the founders are usually on Twitter, so we can parse what they tweet and what other content they post to gauge if they seem like a good fit for us.

However, if we focus too much on making a big haystack – say we add a lot of new sources with a low signal-to-noise ratio (ie, lots of irrelevant companies) – it becomes difficult for any magnet to find the needles, no matter how good it is.

A stronger magnet

We can also improve our screening and evaluation – making a stronger magnet that finds more needles in the same amount of hay.

This is where precision and recall come in.

Precision and recall are performance metrics for machine learning models. They are two different measures of relevance, so you can judge how well suited your model is to the task, and how you can improve it. In our case, they help us judge how many interesting companies our magnet is giving us.

Recall is a measure of how many relevant items the model detected out of the sample. You calculate it by dividing the number of true positives by the number of relevant items in the sample. So out of all the needles in the haystack, how many ended up in our bucket? For example, there were 12 needles in our haystack, and our magnet picked out 4 of them. So the recall is 4/12 = 33%.

The precision of a model describes how many of the detected items are actually relevant. You calculate it by dividing the true positives by overall positives. So what proportion of our bucket is needles? For example, there are 4 needles in the bucket and 12 hay straws. So the precision is 4/(4+12) = 25%.

A higher recall means that the model returns most of the relevant results (whether or not irrelevant ones are also returned). A higher precision means that the model returns a higher proportion of relevant results.
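The two worked examples above can be checked in a few lines of Python. The function and variable names are ours, chosen for illustration; the numbers are the ones from the needle-and-hay examples.

```python
def recall(true_positives: int, relevant_total: int) -> float:
    """Share of all needles in the haystack that reached the bucket."""
    return true_positives / relevant_total


def precision(true_positives: int, false_positives: int) -> float:
    """Share of the bucket that is needles rather than hay."""
    return true_positives / (true_positives + false_positives)


needles_in_haystack = 12
needles_in_bucket = 4
hay_in_bucket = 12

r = recall(needles_in_bucket, needles_in_haystack)
p = precision(needles_in_bucket, hay_in_bucket)
print(f"recall={r:.0%} precision={p:.0%}")  # recall=33% precision=25%
```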

The trick is to find a model that strikes the right balance between the two.

Tipping the balance

If we focus too much on precision, maximising the proportion of needles in the bucket, we can get close to 100% (a bucket with nothing but needles), but we end up with a model that’s too strict. It would struggle to fill the bucket, so our investors would have very few new opportunities to follow up on that week, and it would reject a lot of good companies just because they don’t quite reach the threshold.

If we focus too much on recall, gathering as many of the needles as possible from the haystack, we will start letting in lots of hay straws as well. We end up with a looser model that overfills the bucket. There might be a lot of good stuff in there, but the investment team doesn’t have the time to pick through it all.

It’s a compromise, then, between two mistakes: do you use up more of the investors’ time by making them look through more companies (because your model was too lenient), or risk missing out on really good companies (because your model was too strict)?
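To see the trade-off in numbers, here is a small sketch that sweeps a score threshold over a toy set of companies. The scores and labels are invented for illustration, and `precision_recall` is a hypothetical helper, not part of any library.

```python
# (score, is_needle) pairs: hypothetical model scores with investor labels.
scored = [
    (0.97, True), (0.91, True), (0.85, False), (0.80, True),
    (0.72, False), (0.65, True), (0.55, False), (0.40, False),
    (0.30, True), (0.10, False),
]


def precision_recall(scored, threshold):
    """Precision and recall if we keep every company scoring >= threshold."""
    kept = [is_needle for score, is_needle in scored if score >= threshold]
    true_positives = sum(kept)
    total_needles = sum(is_needle for _, is_needle in scored)
    # Convention assumed here: an empty bucket counts as perfectly precise.
    precision = true_positives / len(kept) if kept else 1.0
    recall = true_positives / total_needles
    return precision, recall


for threshold in (0.9, 0.6, 0.2):
    p, r = precision_recall(scored, threshold)
    print(f"threshold={threshold}: precision={p:.2f}, recall={r:.2f}")
# threshold=0.9: precision=1.00, recall=0.40
# threshold=0.6: precision=0.67, recall=0.80
# threshold=0.2: precision=0.56, recall=1.00
```

On this toy data, the strict threshold gives a bucket of pure needles but leaves most of them in the haystack, while the lenient one finds every needle at the cost of a bucket that is nearly half hay.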

VC investment returns have historically followed a power law distribution. That means that, typically, just a handful of successful investments drive the bulk of a fund’s returns. So we don’t have to find all the successful companies in the world, but out of the few investments that we do make, some had better turn out to be successful. This suggests that in our case we should focus on getting high precision. Ultimately, which combination of precision and recall we choose depends on the preferences of our investment team. If they have spare capacity to review more deals, we can show them more, thereby increasing recall (the chance of finding an additional needle in the haystack) and lowering precision.

Part of the way we find this balance is by tuning the magnet for each haystack. We use the same model across all sources, but a different threshold for each one. For each company, our model returns a number between zero and one – the higher the number, the more likely it is that the company is a good fit for us. From a high-quality, curated source, we might allow in all companies scoring 0.6 or above. But for a noisier source, we might only allow in companies that hit 0.95 or above.
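In code, per-source thresholds can be as simple as a lookup table. This is a sketch, assuming each company record carries its source and its model score; the source names, the fallback value and `passes_screen` are hypothetical, and only 0.6 and 0.95 come from the examples above.

```python
# Hypothetical per-source cut-offs, using the 0.6 and 0.95 figures from the text.
THRESHOLDS = {"curated_list": 0.6, "noisy_feed": 0.95}
DEFAULT_THRESHOLD = 0.9  # assumed fallback for a source with no tuned cut-off


def passes_screen(score: float, source: str) -> bool:
    """Keep a company if its score meets the cut-off for its source."""
    return score >= THRESHOLDS.get(source, DEFAULT_THRESHOLD)


companies = [
    {"name": "acme", "source": "curated_list", "score": 0.70},
    {"name": "birch", "source": "noisy_feed", "score": 0.70},
    {"name": "cobalt", "source": "noisy_feed", "score": 0.97},
]

bucket = [c["name"] for c in companies if passes_screen(c["score"], c["source"])]
print(bucket)  # ['acme', 'cobalt']
```

The same score of 0.70 gets through from the curated source and is rejected from the noisy one, which is the whole point of keeping one model and moving only the threshold.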

We also rely on feedback from our investment team. We track how our pipeline’s doing, breaking down the results into companies the pipeline found that we then invested in, ones our investors rejected, and ones that our investors found but the pipeline missed. And by marking the rejected companies with a specific reason – wrong sector, bad founder fit, poor thesis fit, etc – we can see exactly where the model needs improving. That way we’re constantly developing stronger magnets: models that have both higher precision and higher recall than previous versions.
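A feedback log like this is easy to summarise. The sketch below assumes one record per company with an outcome and, for rejections, a reason; the field names and records are invented for illustration.

```python
from collections import Counter

# Hypothetical feedback log: one record per company reviewed or found by investors.
feedback = [
    {"company": "acme", "outcome": "invested", "reason": None},
    {"company": "birch", "outcome": "rejected", "reason": "wrong sector"},
    {"company": "cobalt", "outcome": "rejected", "reason": "poor thesis fit"},
    {"company": "dune", "outcome": "rejected", "reason": "wrong sector"},
    {"company": "elm", "outcome": "missed", "reason": None},
]

# How the pipeline did overall: invested, rejected, or missed.
outcomes = Counter(record["outcome"] for record in feedback)

# Why rejected companies were rejected, most common reason first.
rejection_reasons = Counter(
    record["reason"] for record in feedback if record["outcome"] == "rejected"
)

print(outcomes["invested"], outcomes["rejected"], outcomes["missed"])  # 1 3 1
print(rejection_reasons.most_common(1))  # [('wrong sector', 2)]
```

If one reason dominates the rejections, as "wrong sector" does here, that is a hint about which part of the model to work on next.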

An open field

We only started running this machine learning pipeline in April, and it’s been a game-changer. Not only can it find companies that investors might never have looked at, it can surface them earlier. It helps our investment team find founders that are a good fit and be the first to talk to them.

So, in part, this data-driven approach is about efficiency. It removes the manual, time-intensive work for investors, allowing them to focus on the aspects that humans are better at – meeting and forming relationships with founders. And it allows more comprehensive coverage to help find best-fit opportunities. But it’s also a way to make VC more inclusive: finding talent wherever it is, and finding it early.

If you’re interested in what we’re building, get in touch!